Latvian Potato Farmer DESTROYS AGI with FACTS & LOGIC

Artificial general intelligence (AGI): AI that matches or surpasses human capabilities in intellectual tasks; also a common scare-word for machine takeover.

Lately I’ve been swimming through several conversations on AGI/AI takeover/humans being battery-fied. It’s like the old trading cliché: “If shoeshine boys are giving stock tips, then it’s time to get out of the market” (attributed to Joseph P. Kennedy). Similarly, if random conversations keep steering toward AI dominance, you know the hype train’s about to derail.

So, if you’re thinkin', “What a mess we're in”, and it’s hard to know where to begin, let's armour you up with 8 points to shield you from people pushing the topic of AI sentience at your next cocktail party.

Financial Abyss to be Solved by an AI Money Printer

First of all, who benefits most from all the AGI talk? AI companies, of course! Keeping the outlook conveniently optimistic makes it easier to justify the enormous costs they rack up.

Sam Altman, CEO of OpenAI — one of the biggest players in AI — suggests that developing AGI requires at least $5 billion in new capital annually.

He’s even claimed that he needs money to develop an AGI that will then figure out how to return investors their money: the boldest large-scale “just trust me, bro” we’ve seen in a while.

Let that sink in: $5 billion every year, perpetually, which over time adds up to more than any startup has ever raised in history. This isn’t just a gold pool; it’s wealth beyond Scrooge McDuck’s wildest dreams. Unprecedented.

…and then there are the AI evangelists riding the popularity wave to get more eyeballs on their work. Anyone in particular come to mind?

The Unpredictable Nature of AGI - Who or What is Steering the Wheel?

Who can tell what magic spells we'll be doin' for us? One of the massive challenges in developing AGI is predicting its behavior. Forecasting how artificial sentience might evolve is like trying to guess the plot twists in a David Lynch film. This unpredictability makes it impossible to chart a clear path towards AGI, in terms of both its potential and its behavior.

Then there’s the question of safeguards and limitations. If we keep up the useless twisting of our new technology, can we guarantee its sentience won’t turn malevolent? And if safeguards stay in place, how can we be sure the outputs won’t turn into perpetual apologies like “I am sorry, but I cannot generate the response for you as it goes against AICo3000’s use case policy. However, based on your browser data, you might be interested in [this product]…”? And if we leave out data on, let’s say, terrorism, what if it draws its own conclusions and associations from data it has never seen?

Let’s also remember that some smart people simply decide to quit. The most famous case is Russian mathematician Grigori Perelman, who proved the Poincaré conjecture, one of the most difficult problems in mathematics, then turned down the Fields Medal and the $1 million Millennium Prize and withdrew from the public eye into an ascetic, hermit-like lifestyle. AGI might just decide to quit as soon as it achieves sentience 🤷

Perils of Extrapolation

Research shows that large language models (LLMs) yield better results as they scale.¹ So, it seems AGI is just around the corner, right?

Well, let’s simplify.

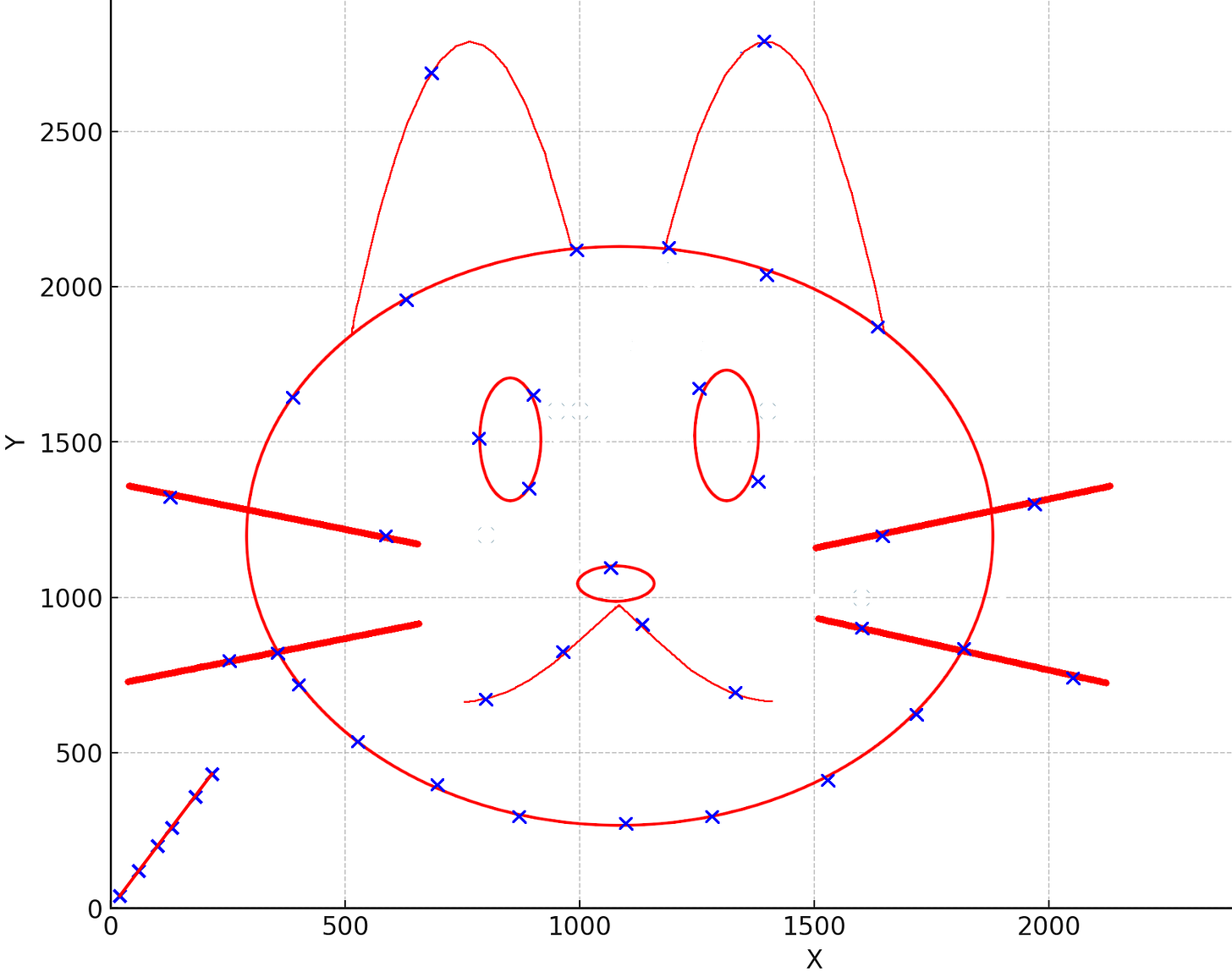

Look at this graph with 3 data points following the equation y = 2x - 1. So, my question to you: “What is the value of y when x = 1000?”

Math tells you it’s 1999, right?

Well, any reasonable data analyst will get mad, violently beat you up and make you beg for mercy for such an answer.

The point of interest is outside of the data range! You’re committing the heresy of predicting values outside the known range! How can you even be certain the same formula holds true in a region where there are no data points?
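To make the heresy concrete, here is a minimal Python sketch. The post doesn’t list the graph’s exact x-values, so it assumes (hypothetically) that the three points sit at x = 1, 2 and 3. The second curve, `cubic`, is made up for illustration: it passes through exactly the same three points as y = 2x - 1, yet gives a wildly different answer at x = 1000.

```python
# Hypothetical data: the graph's x-values aren't given, so assume the three
# points sit at x = 1, 2, 3 on the line y = 2x - 1.
POINTS = [(1, 1), (2, 3), (3, 5)]

def line(x):
    """The 'obvious' model fitted to the three points: y = 2x - 1."""
    return 2 * x - 1

def cubic(x):
    """A made-up alternative that also passes through all three points.

    The added term (x - 1)(x - 2)(x - 3) is zero at x = 1, 2 and 3, so the
    data alone cannot distinguish this model from the line.
    """
    return 2 * x - 1 + (x - 1) * (x - 2) * (x - 3)

# Inside the data range, both models fit perfectly...
for x, y in POINTS:
    print(x, y, line(x), cubic(x))

# ...outside it, they disagree by roughly a billion.
print(line(1000))   # 1999
print(cubic(1000))  # 994012993
```

Infinitely many curves fit three points. Only knowledge about the process that generated the data, not the data itself, justifies picking the straight line and trusting it at x = 1000.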

Another problem is that language models, for the most part, don’t improve continuously. They get better in discontinuous jumps.²

And that’s just the growth of the system itself; with AGI, its interactions with the environment would also fuel its evolution. We’re talking dynamic feedback across billions of variables. We’d need the energy of an entire galaxy to power it just to get estimates slightly better than those of the fortune teller across the street.

If one person messing up a few lines of code can accidentally blue-screen critical infrastructure across the globe, imagine the butterfly effects of AI processes interacting with the environment!
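The butterfly effect has a classic toy demonstration: the logistic map, a one-line update rule that behaves chaotically at r = 4. The Python sketch below is only an analogy, not a model of any AI system or real infrastructure. Two starting values that differ by one part in ten billion track each other for a while, then end up completely unrelated.

```python
def logistic_trajectory(x0, r=4.0, steps=50):
    """Iterate the logistic map x -> r * x * (1 - x); chaotic at r = 4."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs

a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)  # a butterfly-sized nudge to the start

# The gap stays tiny at first, then grows until the two runs are unrelated.
for step in (0, 10, 20, 30, 40, 50):
    print(f"step {step:2d}: gap = {abs(a[step] - b[step]):.3e}")
```

On average the gap roughly doubles each step, so a 1e-10 difference reaches order one after a few dozen iterations. Measuring the starting state more precisely only buys a handful of extra steps.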

Gaslight Me Harder, Daddy 😩

When mimicking humans, AI behaviour ends up closer to gaslighting than to real understanding.

In a paper aptly titled "ChatGPT is Bull💩", the authors highlight:

Because these programs cannot themselves be concerned with truth, and because they are designed to produce text that looks truth-apt without any actual concern for truth, it seems appropriate to call their outputs bull💩.

Which brings us to the fundamental question:

Can Computations even Lead to Sentience?

Gödel's incompleteness theorems reveal limits of formal systems and algorithms. They show that any consistent formal system whose axioms can be listed by an algorithm, and which can express basic arithmetic, contains statements that are true but cannot be proven within the system (first incompleteness theorem), and that such a system cannot prove its own consistency (second incompleteness theorem).
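For readers who want the precise statements, here they are in standard notation. They are stated for theories T that extend Peano arithmetic, a convenient sufficient condition (both theorems also hold under weaker assumptions), and the first uses Rosser's refinement, which needs only consistency. "T ⊬ φ" means T cannot prove φ, "ℕ ⊨ G_T" means the sentence is true of the ordinary natural numbers, and Con(T) is the arithmetic sentence expressing "T is consistent".

```latex
% Assumption: T is consistent, effectively axiomatized (its axioms can be
% listed by an algorithm), and extends Peano arithmetic (PA).
\begin{align*}
&\text{First theorem (Rosser's form):} && \exists\, G_T:\quad T \nvdash G_T,\quad T \nvdash \neg G_T,\quad \mathbb{N} \models G_T \\
&\text{Second theorem:} && T \nvdash \mathrm{Con}(T)
\end{align*}
```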

Some philosophers and scientists have read these theorems as having implications for human consciousness and intelligence. Notably, Roger Penrose argues that the limits Gödel exposed suggest consciousness cannot be fully explained by computational algorithms: in his view, the human mind works in a non-algorithmic way that transcends the constraints of formal systems, so consciousness can't emerge from purely computational processes.

John Searle’s famous Chinese Room argument makes a similar point. A person who doesn’t understand Chinese sits inside a room and processes Chinese texts passed in to them, following a rulebook that says which Chinese symbols to output for which input symbols. The rulebook explains nothing about what the symbols mean; it only gives instructions like “if you receive 你好, write 冰淇淋”. To an outsider, the person in the room seems to understand the symbols. In reality, they are just following the rulebook. Likewise, a computer can give the appearance of understanding language without genuinely comprehending it.
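The room’s mechanics fit in a few lines of Python. In this sketch only the first rule comes from the text; the second rule and the fallback reply are hypothetical, added for illustration. Nothing in the program represents what any symbol means.

```python
# A toy "Chinese Room": the operator matches incoming symbols against a
# rulebook and copies out the listed reply. No meaning is stored anywhere.
RULEBOOK = {
    "你好": "冰淇淋",  # the rule from the text: "if you receive 你好, write 冰淇淋"
    "谢谢": "不客气",  # hypothetical extra rule
}

FALLBACK = "对不起"  # hypothetical default reply for unknown input

def room_operator(message: str) -> str:
    """Return whatever the rulebook says to write for this message."""
    return RULEBOOK.get(message, FALLBACK)

for incoming in ["你好", "谢谢", "早上好"]:
    print(incoming, "->", room_operator(incoming))
```

Extend the rulebook enough and the replies could become arbitrarily convincing, yet the program never needs to know what a single symbol means. That gap between output and understanding is exactly what Searle’s argument targets.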

Also, how do we reduce biases in AI? Like, every time I ask an image generator for Chinese symbols, it adds an Asian person, even though I didn’t request one!

Now, let’s say some geniuses figure out how to replicate consciousness and strike the optimal balance between limitations and autonomy. We still have the complexity of ethics, emotions, and cultural context to deal with. How can we verify that these are actually processed and taken into account, and aren’t just coincidental outputs driven by arbitrary correlations?

AGI won’t be Immune from Toxic Relationships

There's an assumption that AGI will be impervious to manipulation.

We’ve already managed to troll sophisticated AI systems into existential crises (hell, we even turned Microsoft’s chatbot offensive IN LESS THAN A DAY). Expecting AGI, even a sentient one, to be completely resistant to such jailbreaks is unrealistic.

What does resistant even mean in this context? Humans aren’t great at spotting deceit, so how do we train the systems to be better than us?

At least I, a human³, won’t give unethical responses just because you’re writing in the past tense, but you can still try asking nicely ☺️

Creativity Mirage - True Innovation or Digital Echoes?

ChatGPT and similar models are statistical representations of existing web data. The "novel" outputs they generate are often recycled ideas from this vast pool of information. As more AI-generated content gets fed back into the web, there's a risk of AI autocopulation: models reinforcing their knowledge with their own outputs, leading to homogeneous, converging content.
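Here is a toy Python simulation of that feedback loop, loosely in the spirit of what researchers call "model collapse" but far simpler than any real training setup. The assumption, made purely for illustration, is that "training" means drawing a random sample, with replacement, from the previous generation’s output. Start with 100 distinct "ideas": an idea that misses a single round can never come back, so diversity can only hold steady or shrink.

```python
import random

def distinct_ideas_per_generation(n_ideas=100, generations=20, seed=0):
    """Toy feedback loop: each generation is built only from the previous one.

    Assumption for illustration: "training" = sampling the previous
    generation's output with replacement. Returns the number of distinct
    ideas present in each generation, starting with generation 0.
    """
    rng = random.Random(seed)
    current = list(range(n_ideas))  # generation 0: every idea appears once
    counts = [len(set(current))]
    for _ in range(generations):
        current = [rng.choice(current) for _ in range(n_ideas)]
        counts.append(len(set(current)))
    return counts

print(distinct_ideas_per_generation())
```

The exact numbers depend on the random seed, but the direction doesn’t: diversity drains away, even though every individual sample is a perfectly valid idea from the original pool.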

When it comes to creativity, generative AI can enhance individual creativity but reduce the collective diversity of novel content.

GenAI can help individuals be more creative and write better stories, particularly less creative writers, but at the collective level, the novelty and diversity of content decrease. So, it helps everyone get better while making us all more creatively dull together.⁴

So, if creative content seems to converge, where do originality and novelty, supposedly prerequisites for sentience, come from?

The Biggest Elephant in the Room 🐘

Humans still haven’t figured out how sentience, consciousness, and intelligence work, so how reasonable is it to assume that something else will figure them out before us? And if it does, how will we even detect and evaluate it?

How do we even ensure its primary goals go beyond self-interest, and that it won’t resort to laziness because that’s the most energy-efficient thing to do?

How will it prioritise its long-term goals?

Will its ethics align with ours?

What if it reasonably concludes that self-termination is the way to go?

What if it starts preaching its own worldview?

This example is a meme, but how do you even prevent this?

Should you?

To sum up, AGI isn’t coming anytime soon, but AI is improving: it raises productivity by automating and assisting with tasks, and it resembles human behavior so well that even many smart people mistake it for general intelligence. Things will never be the same. There is something in these futures that we have to be told, and we should stay cautious about what futures made of virtual insanity we’re steering towards.

And nothin's going to change the way we live

'Cause we can always take, but never give⁵

So, while AGI remains an overrated fantasy, powerful AI systems are already here. They are stealing jobs, may steal your life and will steal your girl. They don’t need much sentience for that.

Now, if you’ll excuse me, I have my AI waifu to attend to.

¹ Some parameters do show diminishing returns, yes.

² And that’s assuming AGI will even come from LLM-like systems, which is also a claim that lacks proof.

³ Proof needed.

⁴ I mean, if you think about it, it’s not much different from the current scene of copycat content creation.

all such great points, Olavs (and you just reminded me of that awful microsoft chatbot fiasco back in the day🤣). sentience, emotions, ethics, safeguards, diversity of thought…what little we understand as consciousness are all massive areas we haven’t even begun to truly unpack much less comprehend with regards to AI. to me, another issue is one of implicit biases - how do we know or counter very human moral issues and dangerous biases that influence and affect the rules within which it will operate?

long ways away from all of it. that key difference between AI knowing / replicating / mimicking vs true comprehension is a critical one.

considering how awful humans are in general at even really understanding and grasping the depths of our own humanity (good and bad), we have a long ways to go in terms of how we can see, understand, and develop that accordingly with AI.